The Anatomy of Node: Crafting a Runtime

Part I - Getting started with V8

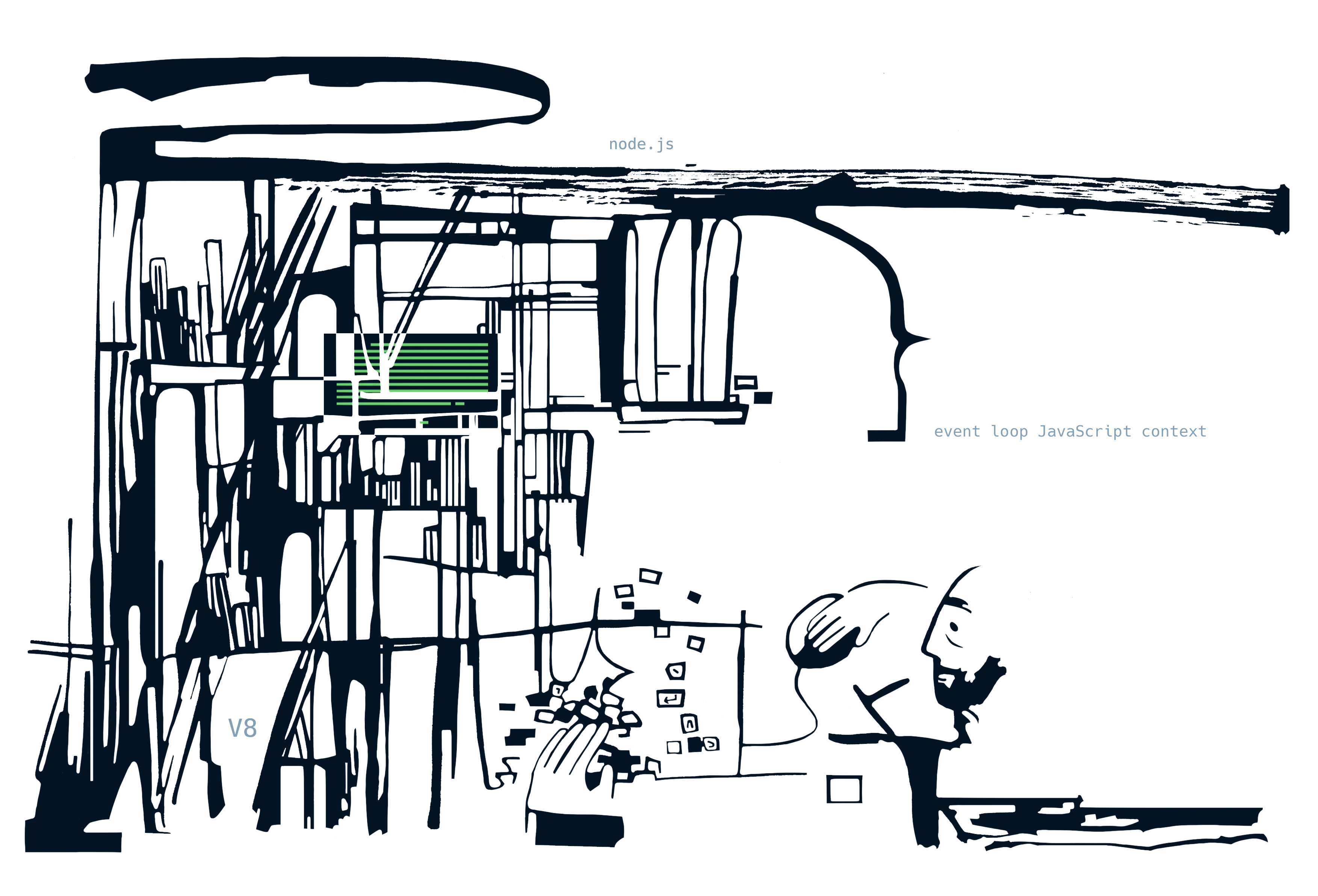

After nearly a decade in software engineering, Node.js has been a constant presence in my work. Yet, despite using it extensively, I feel like I only have a vague idea of how it functions internally. Of course, I've heard the terms "libuv" and "V8" and I know what an event loop is, but I don't have a coherent mental model of how it all comes together.

Recently, I had a cathartic experience of reading through Linux source code with a guide book. Suddenly, so many things started clicking into place; the magic and hand-waving disappeared and became concrete code. I'd like to recreate this experience for Node.js in this blog series.

We will build our own little JavaScript runtime from scratch, starting with compiling V8 and going from there. We will study Node's source code to see how it ticks inside, consult the ECMAScript spec for answers and think through the same design decisions Node authors faced. By the end of the series, we will understand exactly how it all comes together, and we will have a fully working HTTP server running in JavaScript that we will have built ourselves.

Revving up the engine

To get started, we'd like to be able to run JavaScript. It just so happens that a few companies have been obsessing over running JavaScript as fast as humanly possible, as if their lives depended on it. The top choices are JavaScriptCore from Apple and V8 from Google, and we'll deny ourselves the pleasure of contrarianism and stick to V8.

Most of the steps to get started with V8 are described in the embedding V8 guide.

You need to git clone Google's CLI tool depot_tools and add it to the system path. Once added, we can use the tools to fetch the source:

mkdir ~/v8

cd ~/v8

fetch v8

cd v8

The whole repository weighs 5.6 GB after fetch is done, so it might take a while on a slower connection.

Once fetched, the repo is in detached HEAD state. We'd like to build a stable version of the engine, so we will have to check it out. The released versions in the V8 repo are available as branch-heads/${TAG_VERSION} refs.

I initially attempted to use the same V8 version that the current version of Node uses (V8 12.9 for Node 23.7.0), but the compiled binaries crashed with segfaults during dynamic library initialization. So I settled on the version mentioned in the embedding guide, which was 13.1. To check it out:

git checkout branch-heads/13.1

Once checked out, sync all the Git modules:

gclient sync

Before we start compiling, we need to create a build configuration:

tools/dev/v8gen.py x64.release.sample

This creates a build configuration in the out.gn/x64.release.sample/args.gn file.

Once the build config is generated, we can start the compilation:

ninja -C out.gn/x64.release.sample v8_monolith

What we are building here is the v8_monolith target, which is specifically used for embedding as it creates a single static library, compared to a swath of multiple shared libraries generated by the default configuration. The sample configuration is just one of the default configs in V8 that are available as starting points to be customized later. release, as opposed to debug, generates a build without debug symbols. And x64 is, of course, the architecture.

The build process is memory heavy. I gave my VM 10 gigs of RAM and 10 gigs of swap, and it took 50 minutes to compile and still ran out of memory close to the end and ultimately failed. Thankfully, Ninja can pick up from a failed job and, once restarted, it finished the rest of the tasks. There's a way to reduce memory consumption of the build by using the -j argument of Ninja to limit the number of jobs running in parallel, but I didn't end up using it:

ninja -C out.gn/x64.release.sample v8_monolith -j 4 # limit ninja to 4 parallel jobs

One more thing we need to do to be able to run our own code is to copy icudtl.dat to the location of our binary. ICU stands for International Components for Unicode, and it provides character encoding, locale-specific behavior, and other internationalization features that V8 relies on. We can copy it from the V8 build folder:

cp out.gn/x64.release.sample/icudtl.dat .

Taking it for a spin

Okay, we now have built the mighty V8. Let's now whip up a small C++ app that will allow us to run JavaScript files with it.

To start, we will just take Google's own hello world example with only a few modifications for reading a script from the filesystem. The whole source can be viewed on GitHub, but here we will discuss the meaty part:

v8::Isolate::CreateParams create_params;

create_params.array_buffer_allocator =

v8::ArrayBuffer::Allocator::NewDefaultAllocator();

v8::Isolate* isolate = v8::Isolate::New(create_params);

{

v8::Isolate::Scope isolate_scope(isolate);

v8::HandleScope handle_scope(isolate);

v8::Local<v8::Context> context = v8::Context::New(isolate);

v8::Context::Scope context_scope(context);

{

const std::optional<std::string> js_code = ReadFile(argv[1]);

if (!js_code) {

return 1;

}

v8::Local<v8::String> source =

v8::String::NewFromUtf8(

isolate,

js_code.value().c_str(),

v8::NewStringType::kNormal).ToLocalChecked();

v8::Local<v8::Script> script =

v8::Script::Compile(context, source).ToLocalChecked();

v8::Local<v8::Value> result = script->Run(context).ToLocalChecked();

v8::String::Utf8Value utf8(isolate, result);

printf("%s\n", *utf8);

}

}

We can see here that an Isolate is created first, followed by a Context that uses the Isolate. The Context is then used to compile and execute the script.
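The snippet above calls a ReadFile helper whose body is not shown in this post. As a rough idea of what such a helper could look like, here is a minimal sketch. It only assumes what the call site suggests, namely that the function takes a path and returns std::optional<std::string>; the actual helper in the repository may differ.

```cpp
#include <fstream>
#include <optional>
#include <sstream>
#include <string>

// Hypothetical helper matching the call site `ReadFile(argv[1])`.
// Reads the whole file into a string and returns std::nullopt
// when the file cannot be opened or a read error occurs.
std::optional<std::string> ReadFile(const std::string& path) {
  std::ifstream file(path, std::ios::in | std::ios::binary);
  if (!file.is_open()) {
    return std::nullopt;
  }

  // Stream the entire file buffer into an in-memory string stream.
  std::ostringstream contents;
  contents << file.rdbuf();
  if (file.bad()) {
    return std::nullopt;
  }
  return contents.str();
}
```

With a helper shaped like this, the caller should still make sure argc is at least 2 before touching argv[1], since constructing a std::string from a null pointer is undefined behavior.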

So what are the Isolate and the Context?

Well, the Isolate, as the name suggests, is an isolated instance of the JavaScript VM. It is the meat of JS execution in V8. It contains its own JS heap, garbage collector, compiler instance and other core runtime components. Multiple Isolates can run in the same process without interfering with each other.

What's the Context? A Context closely corresponds to what the ECMAScript specification calls a Realm. It provides the root object of JS execution and necessary built-in objects, like Object, Array, Promise, etc. When JS code is executed, it always runs within a Context, which determines the functions and global variables available to the code. A single Isolate may contain multiple Contexts.

Does Node.js do the same as our little hello-world? Well, yes and no. Let's look into Node's source and see if we can trace similarities. Here I will be using the master branch at the time of writing; some details of the code may change at a later date.

If you follow node.cc in the src folder, you will find the NodeMainInstance class used inside the StartInternal function:

// node/src/node.cc

static ExitCode StartInternal(int argc, char** argv) {

// Other setup stuff

// ....

NodeMainInstance main_instance(snapshot_data,

uv_default_loop(),

per_process::v8_platform.Platform(),

result->args(),

result->exec_args());

return main_instance.Run();

}

Inside the NodeMainInstance constructor, an Isolate like the one we're using here is initialized in a similar fashion:

// node/src/node_main_instance.cc

NodeMainInstance::NodeMainInstance(const SnapshotData* snapshot_data,

uv_loop_t* event_loop,

MultiIsolatePlatform* platform,

const std::vector<std::string>& args,

const std::vector<std::string>& exec_args)

: args_(args),

exec_args_(exec_args),

array_buffer_allocator_(ArrayBufferAllocator::Create()),

isolate_(nullptr),

platform_(platform),

isolate_data_(),

isolate_params_(std::make_unique<Isolate::CreateParams>()),

snapshot_data_(snapshot_data) {

isolate_params_->array_buffer_allocator = array_buffer_allocator_.get();

// Isolate created here.

isolate_ =

NewIsolate(isolate_params_.get(), event_loop, platform, snapshot_data);

// rest of the function

But Context initialization is a bit trickier. To save time, Node uses a pre-generated snapshot of a V8 Context to initialize the environment. We can see it in the CreateMainEnvironment function, after the snapshot_data check:

// node/src/node_main_instance.cc

DeleteFnPtr<Environment, FreeEnvironment>

NodeMainInstance::CreateMainEnvironment(ExitCode* exit_code) {

// beginning of function omitted for clarity

Local<Context> context;

DeleteFnPtr<Environment, FreeEnvironment> env;

if (snapshot_data_ != nullptr) {

// Create environment from snapshot

env.reset(CreateEnvironment(isolate_data_.get(),

Local<Context>(),  // pass empty context

args_,

exec_args_));

// openssl initialisation omitted for clarity

} else {

// build a new Context from scratch

context = NewContext(isolate_);

CHECK(!context.IsEmpty());

Context::Scope context_scope(context);

env.reset(

CreateEnvironment(isolate_data_.get(), context, args_, exec_args_));

}

return env;

}

If snapshot data is available, an empty Context is passed into the CreateEnvironment function. CreateEnvironment is defined in node/src/api/environment.cc:

//node/src/api/environment.cc

Environment* CreateEnvironment(

IsolateData* isolate_data,

Local<Context> context,

const std::vector<std::string>& args,

const std::vector<std::string>& exec_args,

EnvironmentFlags::Flags flags,

ThreadId thread_id,

std::unique_ptr<InspectorParentHandle> inspector_parent_handle) {

Isolate* isolate = isolate_data->isolate();

Isolate::Scope isolate_scope(isolate);

HandleScope handle_scope(isolate);

const bool use_snapshot = context.IsEmpty();

// environment initialisation omitted for clarity

// initialize context from snapshot

if (use_snapshot) {

context = Context::FromSnapshot(isolate,

SnapshotData::kNodeMainContextIndex,

v8::DeserializeInternalFieldsCallback(

DeserializeNodeInternalFields, env),

nullptr,

MaybeLocal<Value>(),

nullptr,

v8::DeserializeContextDataCallback(

DeserializeNodeContextData, env))

.ToLocalChecked();

CHECK(!context.IsEmpty());

Context::Scope context_scope(context);

}

// rest of the function

The function checks whether the passed context is empty and, if it is, retrieves one from the snapshot data. It makes sense to initialize the context from a static snapshot since, at the start of execution, the environment is always the same. If the snapshot is not available, Node initializes a new Context with an Isolate just like we did.

Are we done already?

We've got ourselves a binary that takes in a JavaScript file and runs it. Have we actually created our own Node.js alternative? Will VCs barge through my door with bags full of money, begging to fund this new revolutionary technology? I don't know, let's see what works.

Simple things work as expected. You can define functions, use operators and use built-ins.

function test_builtin (a, b) {

return Math.floor(a/b);

}

test_builtin(5, 2);

// returns 2

The built-ins are rather limited. Printing the contents of globalThis using this script:

JSON.stringify(Object.getOwnPropertyNames(globalThis));

returns the following list:

["Object","Function","Array","Number","parseFloat","parseInt","Infinity","NaN",

"undefined","Boolean","String","Symbol","Date","Promise","RegExp","Error","AggregateError",

"EvalError","RangeError","ReferenceError","SyntaxError","TypeError","URIError","globalThis",

"JSON","Math","Intl","ArrayBuffer","Atomics","Uint8Array","Int8Array","Uint16Array","Int16Array",

"Uint32Array","Int32Array","Float32Array","Float64Array","Uint8ClampedArray","BigUint64Array",

"BigInt64Array","DataView","Map","BigInt","Set","WeakMap","WeakSet","Proxy","Reflect",

"FinalizationRegistry","WeakRef","decodeURI","decodeURIComponent","encodeURI",

"encodeURIComponent","escape","unescape","eval","isFinite","isNaN","console","Iterator",

"SharedArrayBuffer","WebAssembly"]

Contrary to my expectations, console is actually here! However, using console.log inside the script does not currently produce any output. This is because while V8 provides the console object structure, it's the embedding application's responsibility to bind these functions to actual output mechanisms, like writing to the terminal. We'll skip implementing this binding for now.

The list of built-ins available in this raw V8 environment is much shorter than Node's. Running the same script in Node, for comparison, we get a list about two times longer.

Check the full list of Node's built-ins:

["Object","Function","Array","Number","parseFloat","parseInt","Infinity","NaN",

"undefined","Boolean","String","Symbol","Date","Promise","RegExp","Error","AggregateError",

"EvalError","RangeError","ReferenceError","SyntaxError","TypeError","URIError","globalThis",

"JSON","Math","Intl","ArrayBuffer","Uint8Array","Int8Array","Uint16Array","Int16Array",

"Uint32Array","Int32Array","Float32Array","Float64Array","Uint8ClampedArray","BigUint64Array",

"BigInt64Array","DataView","Map","BigInt","Set","WeakMap","WeakSet","Proxy","Reflect",

"FinalizationRegistry","WeakRef","decodeURI","decodeURIComponent","encodeURI",

"encodeURIComponent","escape","unescape","eval","isFinite","isNaN","console",

"SharedArrayBuffer","Atomics","WebAssembly","process","global","Buffer","queueMicrotask",

"clearImmediate","setImmediate","structuredClone","URL","URLSearchParams","DOMException",

"clearInterval","clearTimeout","setInterval","setTimeout","BroadcastChannel","AbortController",

"AbortSignal","Event","EventTarget","MessageChannel","MessagePort","MessageEvent","atob","btoa",

"Blob","Performance","performance","TextEncoder","TextDecoder","TransformStream",

"TransformStreamDefaultController","WritableStream","WritableStreamDefaultController",

"WritableStreamDefaultWriter","ReadableStream","ReadableStreamDefaultReader","ReadableStreamBYOBReader",

"ReadableStreamBYOBRequest","ReadableByteStreamController","ReadableStreamDefaultController",

"ByteLengthQueuingStrategy","CountQueuingStrategy","TextEncoderStream","TextDecoderStream",

"CompressionStream","DecompressionStream","fetch","FormData","Headers","Request","Response"]

Are we async yet?

The state of async in our current setup is rather interesting. As we've seen previously, the Promise built-in is defined within our environment. However, we lack any way to interact with the network yet, so no fetch and no http, and even simple async scheduling with setTimeout is not available to us.

So what asynchronous operations can we perform?

Well, we can resolve promises. This distinction exists because the ECMAScript spec defines Jobs, which translate to the microtask queue implemented by V8. Network interactions and timers, on the other hand, are handled as macrotasks, which must be implemented by the embedding environment. Therefore, we can execute microtasks, but macrotasks are currently unavailable in any form.

Let's whip up a script to validate that promises do in fact get resolved:

let message = "Initial value";

Promise.resolve().then(() => {

message = "Promise was processed!";

});

message;

Running this script in our current implementation results in "Initial value" being printed to the terminal. Why? Because the microtask queue has not been processed. This outcome mirrors Node.js in that the synchronous code execution completes before the microtask queue is processed. Node.js automatically processes this queue shortly after. But since we have access to internals, we can do things a bit differently.

To actually process the task queue, let's do a quick and dirty modification to our program. We will force V8 to process the queue and then read the result back into our C++ code:

// PerformMicrotaskCheckpoint runs the queue until it's empty

isolate->PerformMicrotaskCheckpoint();

v8::Local<v8::String> checkSource =

v8::String::NewFromUtf8(isolate, "message;", v8::NewStringType::kNormal).ToLocalChecked();

v8::Local<v8::Script> checkScript =

v8::Script::Compile(context, checkSource).ToLocalChecked();

result = checkScript->Run(context).ToLocalChecked();

v8::String::Utf8Value utf8(isolate, result);

printf("%s\n", *utf8);

First we force the isolate to run the queue with PerformMicrotaskCheckpoint. Then we create a new script meant only to return the value of message to the C++ code. Since we're using the same Context we created earlier, the variable message is already defined in its lexical scope. So running the microtask queue has updated it to the new value. Once we run the compiled binary we get "Promise was processed!" as expected.
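To make the ordering easier to see without V8 in the picture, here is a toy model in plain C++. It is only a sketch of the idea and not how V8 implements its queue: the MicrotaskQueue class, its Enqueue and PerformCheckpoint methods, and the RunScript function are all hypothetical names made up for this illustration.

```cpp
#include <functional>
#include <queue>
#include <string>
#include <utility>

// Toy stand-in for an engine's microtask queue (not V8's implementation).
class MicrotaskQueue {
 public:
  void Enqueue(std::function<void()> task) { tasks_.push(std::move(task)); }

  // Runs tasks until the queue is empty, including any tasks that
  // get enqueued by other tasks while the queue is being drained.
  void PerformCheckpoint() {
    while (!tasks_.empty()) {
      std::function<void()> task = std::move(tasks_.front());
      tasks_.pop();
      task();
    }
  }

 private:
  std::queue<std::function<void()>> tasks_;
};

// Mirrors the JavaScript example: the "script" schedules a reaction and
// returns the value of message before any microtask has had a chance to run.
std::string RunScript(MicrotaskQueue& queue, std::string& message) {
  message = "Initial value";
  queue.Enqueue([&message] { message = "Promise was processed!"; });
  return message;  // the enqueued task has not run yet
}
```

Calling RunScript returns the initial value, and only a later call to PerformCheckpoint on the same queue lets the scheduled task update message. That is the same sequence we forced by hand in the embedder code above.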

The full example with processing the queue is available on GitHub in simple-runner-microtask.cc.

The Road Goes Ever On

Let's take stock of what we figured out so far:

- we learned how to build V8 and embed it

- we checked out how Node.js initialization of V8 API compares to ours (and found an interesting optimization)

- we've experimented a bit with running scripts

- we saw with our own eyes the difference between micro and macro tasks (since we can't run the latter yet).

But even though we can now run a JS script ourselves using an embedded V8 engine, it's clear that we are far from being able to serve HTTP requests with this thing.

Next time, we will take a step back from JavaScript specifics and think through the problem of building web services from first principles. We will discuss the options we have to handle requests efficiently and what kinds of problems an event loop solves, and, of course, we will build our own simplified version of an event loop.
